What is the reason behind the drift of Western culture into empty, nihilistic, materialistic hedonism? Dr. Jordan Peterson joins Stefan Molyneux to discuss the complicated nature of cultural division, the reduction of personal responsibility, the danger of not "having meaning" in your life, the nature of ideology, developing a sense of efficacy in the world, suffering as an intrinsic component of human nature, the argument for free will and much more!

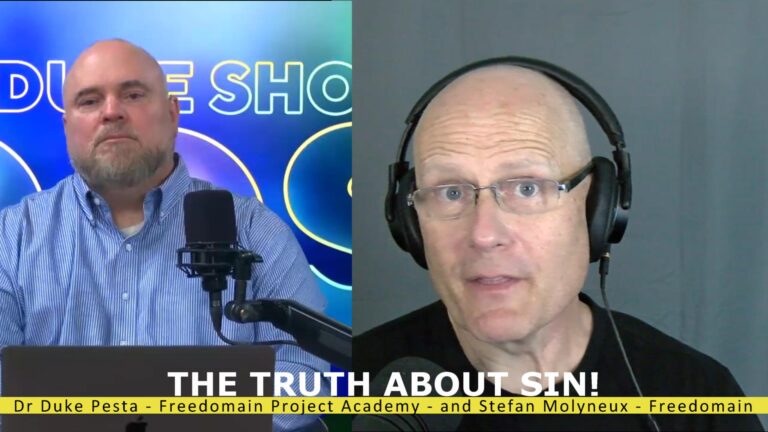

We chat again with Dr. Duke Pesta, this time on THE TRUTH ABOUT SIN!

We explore gratitude and its historical significance, emphasizing its role in our relationship with God. We discuss self-ownership and how it sets humans apart from animals, as well as the concept of free will and its connection to consciousness. We address the lack of gratitude in society and its impact, and also delve into the issue of sin and Jesus' perspective on it. We discuss the importance of faith, evaluate different ideologies, and highlight the significance of actively loving others.

Noam Chomsky speaks with Stefan Molyneux about the Israeli-Palestinian conflict and how United States involvement has impacted peace talks. Also includes: Jewish-state worship, totalitarian streaks in Jewish thinking, the mainstream media filter, how discussions evolve (or don't) through the generations, a roadmap to peace and a diplomatic two-state solution.

"What are your thoughts on the morality of gambling and making money from gamblers? It doesn't seem to violate UPB or the non-aggression principle, but to me it's always seemed scummy and manipulative. Instead of providing any value, these businesses make money off of dumb people's inability to discern probabilities."

Join the PREMIUM philosophy community on the web for free!

Get my new series on the Truth About the French Revolution, the Truth About Sadism, access to the audiobook for my new book 'Peaceful Parenting,' StefBOT-AI, private livestreams, premium call in shows, the 22 Part History of Philosophers series and more!

See you soon!

https://freedomain.locals.com/support/promo/UPB2022

Support the show using a variety of donation methods.